A RAID array is a collection of disks configured, by software or hardware, to protect data, enhance performance, or both. RAID stands for redundant array of independent disks. There are many different RAID levels, and each one affects read speed, write speed and redundancy (fault tolerance) differently.

Like RAID 5, RAID 6 was developed in the 1980s. A RAID 6 array built with a hardware controller is often used as a good compromise between redundancy and speed. A RAID 6 array requires at least four disks and offers increased read speeds, although writes carry a penalty because every write must also update two parity blocks. This RAID level can tolerate two simultaneous disk failures.

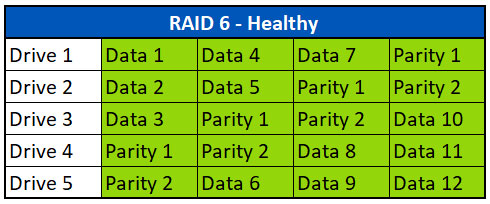

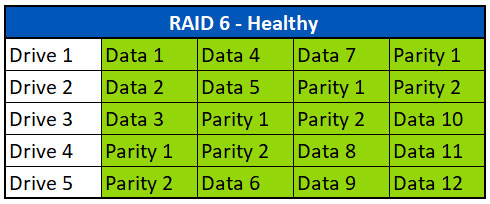

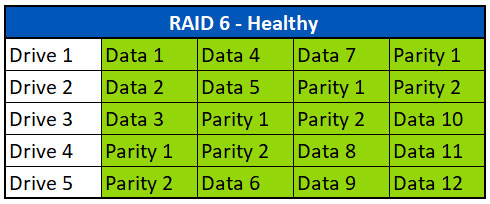

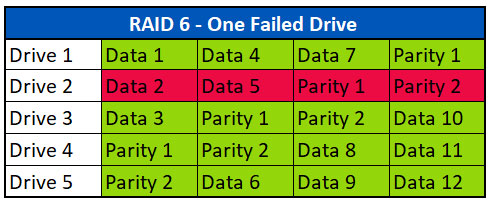

What does a RAID 6 configuration look like?

RAID 6 is similar to RAID 5 in that both arrays use data striping with parity. The difference is that where RAID 5 stores one parity block per stripe, RAID 6 stores two. This allows a RAID 6 array to withstand two drive failures rather than just one. In most RAID 6 configurations, the first parity block is the XOR of the data blocks in the same stripe, while the second parity block is computed with a different algorithm that is typically proprietary.

A RAID 6 array dedicates two disks' worth of capacity to parity, no matter how many disks it contains. A RAID 10 array, by contrast, mirrors every block and gives up half of its raw capacity, so for arrays of more than four drives RAID 6 is cheaper to implement than a RAID 10 of the same usable size. RAID 6 also allows more flexibility and larger volumes than RAID 1, where every drive holds a full copy of the same data.
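
The capacity trade-off can be checked with simple arithmetic. The function below is a minimal sketch that assumes identical drives and ignores metadata and formatting overhead; the function name and the `level` strings are hypothetical, chosen only for illustration.

```python
def usable_capacity_tb(level: str, drives: int, drive_tb: float) -> float:
    """Usable capacity of an array of identical drives (metadata overhead ignored)."""
    if level == "RAID 6":
        if drives < 4:
            raise ValueError("RAID 6 needs at least four drives")
        return (drives - 2) * drive_tb  # two drives' worth of space holds parity
    if level == "RAID 10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least four")
        return (drives // 2) * drive_tb  # every block is stored twice
    if level == "RAID 1":
        if drives < 2:
            raise ValueError("RAID 1 needs at least two drives")
        return drive_tb  # every drive holds a full copy of the same data
    raise ValueError(f"unsupported RAID level: {level}")


for level in ("RAID 6", "RAID 10"):
    print(level, usable_capacity_tb(level, 8, 4.0), "TB usable from eight 4 TB drives")
```

With eight 4 TB drives this gives 24 TB usable for RAID 6 versus 16 TB for RAID 10; with only four drives both levels leave 8 TB, so the cost advantage of RAID 6 grows with the number of drives.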

In the example above, we have a five-drive RAID 6 array like you would see in a Dell PowerEdge server. The first parity block (Parity 1), found on Drive 4 in the first stripe, is the XOR of the data in the blocks named Data 1 (Drive 1), Data 2 (Drive 2), and Data 3 (Drive 3).
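
The XOR calculation for Parity 1 can be sketched in a few lines of Python. The block contents below are made-up 4-byte values (real stripe blocks are far larger), and `xor_blocks` is a hypothetical helper, not part of any controller's interface.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equal-length blocks, as used for the first parity block."""
    if len({len(block) for block in blocks}) != 1:
        raise ValueError("need one or more blocks of equal length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Made-up contents for the three data blocks in the first stripe.
data1 = bytes([0x0F, 0xA0, 0x33, 0x01])  # Drive 1
data2 = bytes([0xF0, 0x0A, 0x55, 0x02])  # Drive 2
data3 = bytes([0x11, 0x22, 0x44, 0x04])  # Drive 3

parity1 = xor_blocks(data1, data2, data3)  # stored on Drive 4
print(parity1.hex())  # ee882207
```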

The second parity block (Parity 2), found on Drive 5 in the first stripe, is calculated from Data 1, Data 2 and Data 3 and, depending on the manufacturer and controller, may also include Parity 1. These parity calculations are repeated for every stripe, with the parity blocks placed on different drives from one stripe to the next.
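
Because the second parity algorithm varies by vendor, the sketch below rests on an assumption: it follows the Reed-Solomon style P+Q scheme used by, for example, Linux software RAID, where Parity 2 is a weighted XOR of the data blocks computed in the finite field GF(2^8) with reducing polynomial 0x11D and generator 2. The controller in the example may use a different scheme, and the function names are hypothetical.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8), reducing by x^8 + x^4 + x^3 + x^2 + 1 (0x11D)."""
    product = 0
    while b:
        if b & 1:
            product ^= a  # addition in GF(2^8) is XOR
        a <<= 1
        if a & 0x100:
            a ^= 0x11D  # reduce back into 8 bits
        b >>= 1
    return product


def gf_pow(base: int, exponent: int) -> int:
    """Raise a byte to a non-negative power in GF(2^8)."""
    result = 1
    for _ in range(exponent):
        result = gf_mul(result, base)
    return result


def q_parity(data_blocks: list[bytes]) -> bytes:
    """Q = g^0*D0 + g^1*D1 + g^2*D2 + ..., with g = 2 and + meaning XOR."""
    q = bytearray(len(data_blocks[0]))
    for index, block in enumerate(data_blocks):
        weight = gf_pow(2, index)  # each data position gets a distinct weight
        for pos, byte in enumerate(block):
            q[pos] ^= gf_mul(weight, byte)
    return bytes(q)


data_blocks = [bytes([0x0F, 0xA0]), bytes([0xF0, 0x0A]), bytes([0x11, 0x22])]
parity2 = q_parity(data_blocks)  # would be stored on Drive 5
print(parity2.hex())
```

Weighting each data block differently is what lets the controller solve for two unknowns: a single XOR equation cannot determine two missing blocks in the same stripe, but P and Q together can.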

What does parity do in a RAID 6 array?

Parity is a mathematical technique that provides additional protection because it allows lost data to be reconstructed. Having parity as part of every data stripe allows the system to rebuild the data if one or two drives fail or go offline. The RAID controller or RAID software can virtually rebuild any missing data block by using parity.

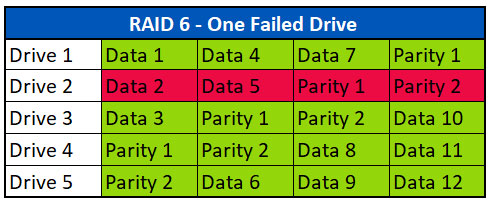

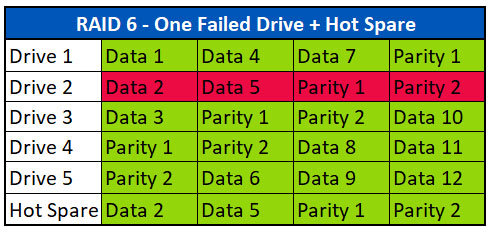

In the example below, we see that Drive 2 failed.

Upon losing a drive, the array goes into degraded mode. In degraded mode, the RAID controller combines the surviving data blocks with parity as needed to present good data to the operating system. In our example, the controller combines Data 1, Data 3 and Parity 1 in the first stripe to reconstruct the missing Data 2. In the second stripe, Data 4, Data 6 and Parity 1 are used to reconstruct Data 5. In the third and fourth stripes, no parity calculation is needed because all the data blocks in those stripes are still present.
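
Reconstructing Data 2 in degraded mode is the same XOR calculation run in reverse: XOR-ing the surviving data blocks with Parity 1 cancels out everything except the missing block. The block values below are made up and the helper name is hypothetical.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# First stripe, written while all five drives were healthy (made-up contents).
data1 = bytes([0x0F, 0xA0, 0x33, 0x01])  # Drive 1
data2 = bytes([0xF0, 0x0A, 0x55, 0x02])  # Drive 2
data3 = bytes([0x11, 0x22, 0x44, 0x04])  # Drive 3
parity1 = xor_blocks(data1, data2, data3)  # Drive 4

# Drive 2 fails. On each read of this stripe the controller recomputes Data 2.
rebuilt_data2 = xor_blocks(data1, data3, parity1)
print(rebuilt_data2 == data2)  # True
```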

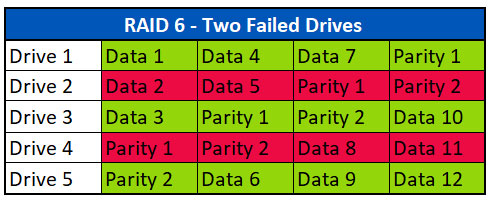

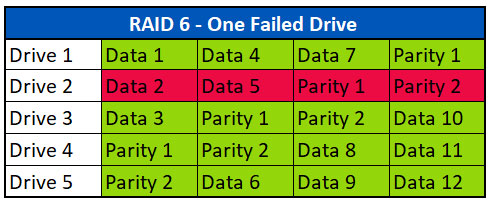

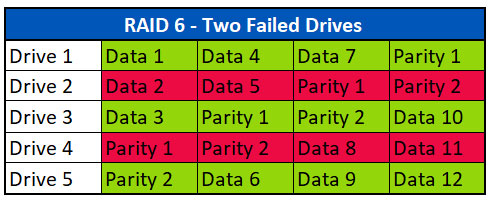

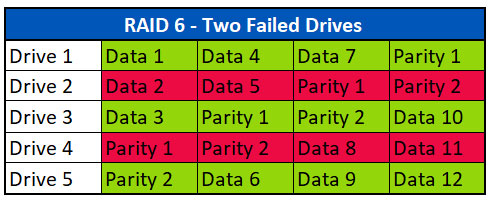

With two parity blocks per stripe, RAID 6 allows for two drives to fail. In the example below, we see that Drives 2 and 4 have failed.

Upon losing two drives, the controller uses the surviving data blocks together with Parity 1, Parity 2 or both to recreate the missing data. In our example, the controller combines Data 1, Data 3 and Parity 2 in the first stripe to reconstruct the missing Data 2. In the second stripe, Data 4, Data 6 and Parity 1 are used to reconstruct Data 5. In the third stripe, Data 7, Data 9 and Parity 2 are used to reconstruct Data 8.
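
When Parity 1 is lost along with a data block, as in the first stripe here, the missing data has to come from Parity 2 alone. Assuming a Reed-Solomon style Parity 2 (GF(2^8), polynomial 0x11D, generator 2, as in Linux software RAID), the known data blocks are stripped out of Parity 2 and the remainder is divided by the missing block's weight. Vendor algorithms differ, and all names and values here are hypothetical.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8), reducing by 0x11D."""
    product = 0
    while b:
        if b & 1:
            product ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11D
        b >>= 1
    return product


def gf_pow(base: int, exponent: int) -> int:
    """Raise a byte to a non-negative power in GF(2^8)."""
    result = 1
    for _ in range(exponent):
        result = gf_mul(result, base)
    return result


def gf_inv(value: int) -> int:
    """Multiplicative inverse: value^254, since value^255 == 1 for any non-zero byte."""
    return gf_pow(value, 254)


def q_parity(data_blocks: list[bytes]) -> bytes:
    """Q = sum of g^i * D_i over the data blocks, with g = 2 and XOR as addition."""
    q = bytearray(len(data_blocks[0]))
    for index, block in enumerate(data_blocks):
        weight = gf_pow(2, index)
        for pos, byte in enumerate(block):
            q[pos] ^= gf_mul(weight, byte)
    return bytes(q)


def rebuild_from_q(surviving: dict[int, bytes], q: bytes, missing: int) -> bytes:
    """Recover data block number `missing` when only Q parity is available."""
    remainder = bytearray(q)
    for index, block in surviving.items():
        weight = gf_pow(2, index)
        for pos, byte in enumerate(block):
            remainder[pos] ^= gf_mul(weight, byte)  # strip out the known blocks
    inverse = gf_inv(gf_pow(2, missing))  # remainder == g^missing * D_missing
    return bytes(gf_mul(inverse, byte) for byte in remainder)


data1, data2, data3 = b"\x0f\xa0\x33", b"\xf0\x0a\x55", b"\x11\x22\x44"
parity2 = q_parity([data1, data2, data3])  # Drive 5 survives

# Drives 2 and 4 fail: Data 2 (index 1) and Parity 1 are both unavailable.
rebuilt = rebuild_from_q({0: data1, 2: data3}, parity2, missing=1)
print(rebuilt == data2)  # True
```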

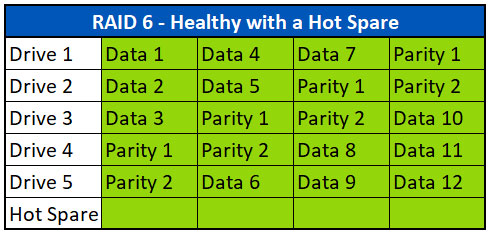

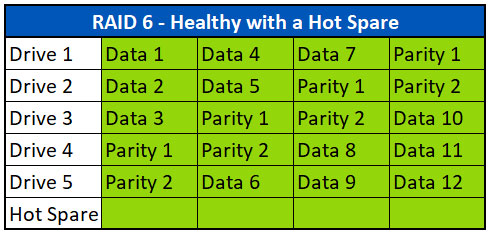

How does a Hot Spare work in a RAID 6 array?

A hot spare is an extra drive (there can be more than one) that is attached to a RAID 6 array so the array can recover quickly if a drive fails. In the above example, we see a healthy RAID 6 array with a single hot spare added. Note that the hot spare does not contain any data until a failure occurs and the drive is needed.

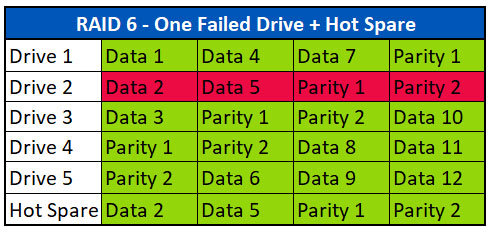

If a hot spare is available to the system, the controller will automatically begin rebuilding the missing data from the failed drive to the hot spare in the event of a failure.

The example above shows that Drive 2 has failed. The system used the hot spare and rebuilt all the missing data from Drive 2 onto the hot spare. Drive 2 can now be removed from the system and replaced with a new drive, which can serve either as a new hot spare or as a new Drive 2 onto which the data is rebuilt.

Why use hot spares? When a drive fails, time is of the essence. Running in degraded mode puts additional stress on the remaining drives and can lead to further failures if the array is not rebuilt quickly. Drives from the same manufacturing lot may also share defects, so other drives in the array could fail soon after the first. Having one or more hot spares available shortens recovery time.

What causes failures in RAID 6 arrays?

There are several reasons why a RAID 6 array might fail. Here are the leading causes we see at Ontrack:

- Multiple disk failures

- Power issues (power spike or low voltage)

- RAID controller or RAID software failure

- RAID corruption (including logical corruption)

- Flood, water or fire damage

- Failed or partial rebuilds

Is data recovery from RAID 6 possible?

Data recovery is possible from a RAID 6 array. Although recovery from a RAID 6 array can be complex and challenging, it is generally successful. The biggest challenge is often the proprietary algorithm used to create the second parity block: each manufacturer implements it differently, so custom tools often have to be researched and developed to support it.

There are several reasons for data loss, and the recovery effort for each one is different. A few examples are below:

Data Recovery with one failed drive

As with RAID 5, if one drive in a RAID 6 array fails, parity can be used to rebuild the missing data. In this scenario, Ontrack is usually able to recover 100% of the data. Upon receipt of a non-functional array, all of the drives from the array are imaged in the clean room (including the failed drive, if possible). Then the array is virtually rebuilt using those images. Once the RAID is assembled, the file system or volume is scanned for corruption and virtually repaired, and the data is extracted. The failed drive is often not needed, as any missing data blocks can be rebuilt from parity.

Data Recovery from two failed drives

Unlike RAID 5, which keeps functioning only while no more than one drive has failed, RAID 6 is designed to tolerate the failure of up to two disks without any loss of data. The process to recover from two failed drives is similar to the process for a single drive failure. Upon receipt of a non-functional array, the drives are imaged in the clean room, including the failed drives, and the array is then virtually rebuilt using those images. If the data on the drives is up to date, the failed drives may not be needed to get a full recovery of the array.

In the above example, Data 1, Data 3 and Parity 2 from stripe one are used to rebuild Data 2. Data 4, Parity 1 and Data 6 are used to rebuild Data 5 in the second stripe. Data 7, Parity 2 and Data 9 are used to rebuild Data 8 in the third stripe.

Once the RAID array is virtually reassembled, the file system or volume is scanned for corruption. In addition to file system corruption, engineers also look for data that is inconsistent or out of date. This happens when time passes between the two drive failures: the drive that failed first stops receiving writes while the array keeps running in degraded mode, so its contents fall out of date. Data recovery engineers need experience to recognize this type of damage so they can virtually repair the volume and extract good file data.
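
One simple way to flag stripes that may hold stale data is to recompute Parity 1 from the imaged data blocks and compare it with the stored parity; a mismatch means the blocks in that stripe no longer agree with each other, for example because one drive missed later writes. This is a hedged sketch of the idea, not Ontrack's tooling: the stripe representation, values and function name are hypothetical.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def inconsistent_stripes(stripes: list[tuple[list[bytes], bytes]]) -> list[int]:
    """Return the indexes of stripes whose stored Parity 1 does not match their data."""
    return [
        index
        for index, (data_blocks, parity1) in enumerate(stripes)
        if xor_blocks(*data_blocks) != parity1
    ]


good = [b"\x01", b"\x02", b"\x04"]
stale = [b"\x01", b"\x09", b"\x04"]  # middle block no longer agrees with the parity
stripes = [(good, b"\x07"), (stale, b"\x07")]
print(inconsistent_stripes(stripes))  # [1]
```

A Parity 1 mismatch on its own does not identify which block in the stripe is out of date.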

Data Recovery from multiple failed drives

It is possible to get a full recovery from a RAID 6 array even if there are more than two drive failures.

In the example above, we have a RAID 6 array with damage on some areas of all five disks. If there are no more than two failed blocks per stripe, it is possible to rebuild the missing data. Ontrack will image as much of each drive as possible.
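
The two-failed-blocks-per-stripe rule can be applied mechanically once each drive has been imaged and its unreadable areas mapped. The sketch below assumes a hypothetical damage map from stripe number (counted from 0) to the drives whose block in that stripe could not be read; the function name is also hypothetical.

```python
def classify_stripes(bad_blocks: dict[int, set[int]], stripe_count: int) -> dict[str, list[int]]:
    """Group stripes by whether RAID 6 parity can rebuild them."""
    groups: dict[str, list[int]] = {"intact": [], "rebuildable": [], "unrecoverable": []}
    for stripe in range(stripe_count):
        missing = len(bad_blocks.get(stripe, set()))
        if missing == 0:
            groups["intact"].append(stripe)
        elif missing <= 2:  # two parity blocks can replace up to two lost blocks
            groups["rebuildable"].append(stripe)
        else:
            groups["unrecoverable"].append(stripe)
    return groups


# Hypothetical damage map for a five-drive array (drives numbered 1-5).
bad_blocks = {0: {2, 4}, 2: {3, 5}, 3: {1, 2, 5}}
result = classify_stripes(bad_blocks, stripe_count=4)
print(result)  # {'intact': [1], 'rebuildable': [0, 2], 'unrecoverable': [3]}
```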

Then the array is virtually rebuilt using those images. In the above example, Data 1, Data 3 and Parity 2 from stripe one are used to rebuild Data 2. No parity is needed for stripe 2, as Data 4, Data 5 and Data 6 are all intact. Data 7, Parity 2 and Data 8 are used to rebuild Data 9 in the third stripe.

Once the RAID array is virtually reassembled, the file system or volume is scanned for corruption. Recoverable data is extracted from the virtually rebuilt array to new media to be put back into production.
